Data Warehouse

Data Warehousing &

Data Modelling

Which do you choose? Kimball, Inmon, DataVault or a combination. We can help you implement the right data architecture & strategy for your business and enable you to develop your Data Warehouse using agile methodologies.

Data Warehousing & ETL allows you to bring all your organisation’s disparate data together in one place, a single point for the truth.

Data Warehouses are often associated with Business Intelligence (BI) tools and reporting systems. For Example, Power BI, SSRS (SQL Server Reporting Services).

Data Warehouses are scalable from the smallest to the most significant business sizes and gives the benefit of being able to create in-depth analytics and rich reports. All safe in the knowledge that they do not impact the performance of your operational systems.

The technology landscape is continually evolving, keeping up to date with trends, regulations, and latest practices can seem a fulltime job, not to mention all the jargon and terminology.

With additional concerns around GDPR, data provenance, lineage and privacy of data, you need a solution which covers all the bases. Our in-depth understanding of these technologies means we do all the hard work, so you don’t have too.

Data Warehousing Architectures

Kimball – Datamart

Ralph Kimball (retired) is an author on the subject of data warehousing and business intelligence. He is one of the original and first architects of data warehousing. His methodology, also known as dimensional modelling or the Kimball methodology, is the de-facto standard in the area of decision support systems.

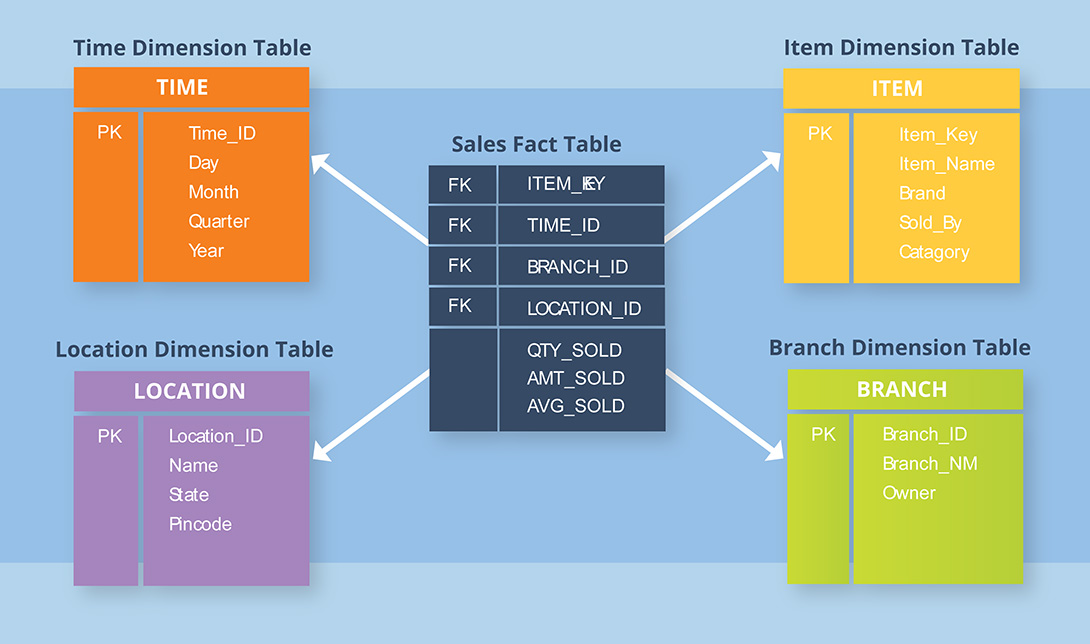

You have probably heard of the star schema methodology. It was Introduced in 1996 by Ralph Kimball, and nearly 30 years later it is still relevant today. Further ready here:- https://www.kimballgroup.com/1997/08/a-dimensional-modeling-manifesto/ Kimball is a bottom-up approach to data warehousing, meaning that the data warehouse is created as an amalgamation after the specific business area’s data are setup. The data marts that facilitate reports and analysis are combined to create a Data Warehouse.

The Dimensional Modelling approach means that the initial setup is relatively easy for the different business areas which have created data marts; however, maintenance of this can be difficult. There may be several revisions to manage, and data and duplicate business rules may exist between the departments.

Inmon – Corporate Information Factory

Bill Inmon is an American computer scientist, referred to by many as the father of the data warehouse. Inmon wrote the first book, held the first conference (with Arnie Barnett), wrote the first column in a magazine and was the first to offer classes in data warehousing.

Inmon created the accepted definition of what a data warehouse is – a subject-oriented, non-volatile, integrated, time-variant collection of data in support of management decisions.

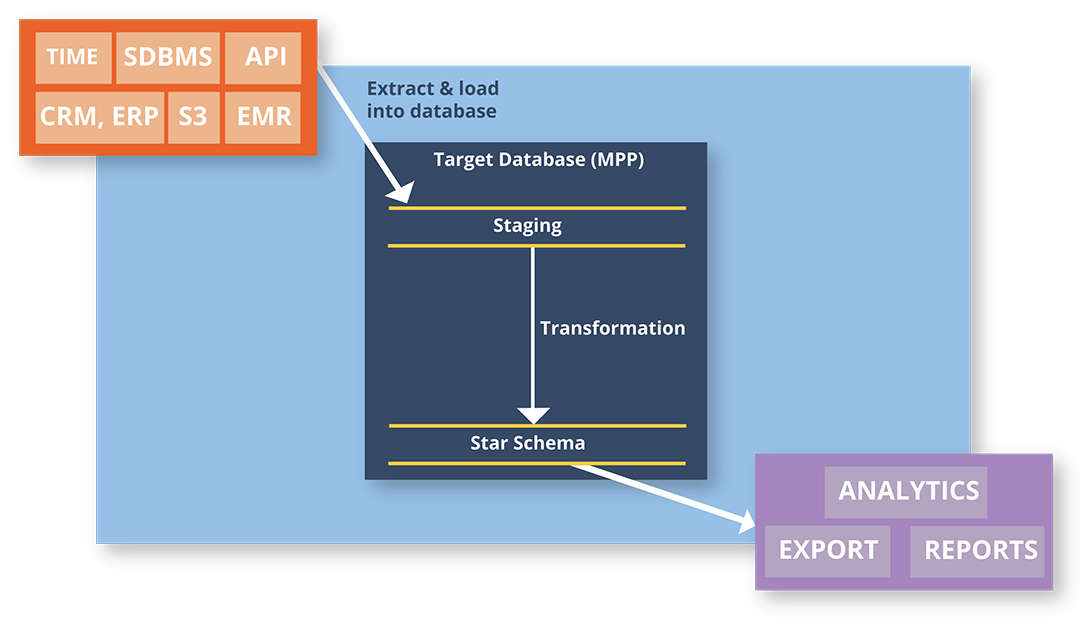

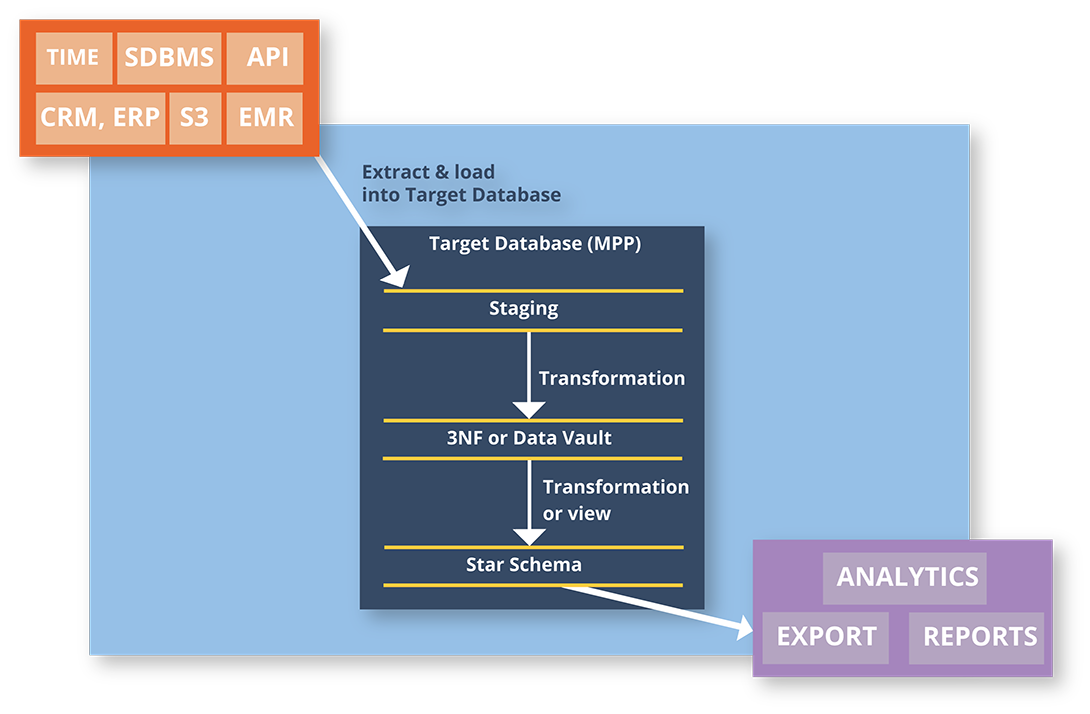

Compared with the approach of the other pioneering architect of data warehousing, Ralph Kimball, Inmon’ s approach is top-down.

Data from all source systems go through ETL processes to the central Data Warehouse in and becomes the single point of truth for the business. All downstream decision support systems feed off the Data Warehouse.

There are many advantages to this approach, the most important of which is a line of demarcation between internal & external systems, 3rd party data. If the sources change then only the ETL process feeding the Data Warehouse needs to change; all downstream systems will be unaware of the changes. You effectively decouple the BI and the Analytics platforms from the source data.

Dan Linstedt – Data Vault 2.0

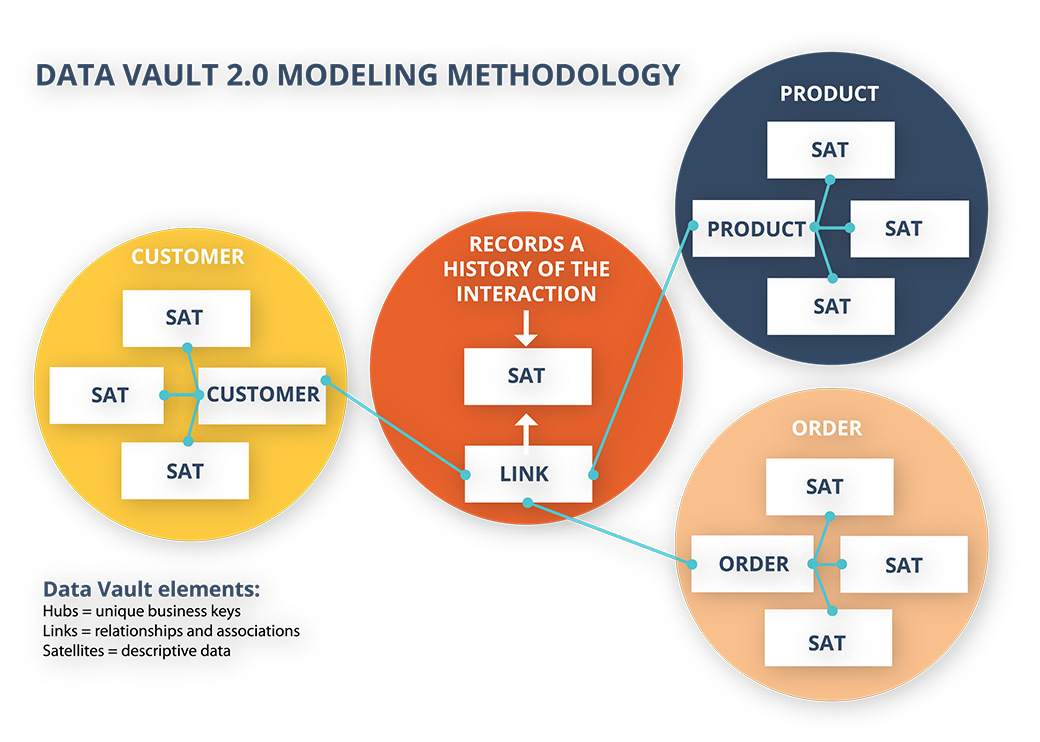

The Data Vault 2.0 is an alternative model for both Kimball and Inmon

Data vault modelling is a database modelling method that has a design that provides long-term and historical storage of data coming in from various systems.

Data Vault can help with :

- Auditing issues.

- Data tracing

- Loading speed.

- Resilient to changes in where the data is stored is coming from.

A Data Vault stores “a single version of the facts” as expressed by Dan Linstedt “all the data, all of the time”.

In other data warehouse architectures, the storing a single version of the truth means that data that does not meet standards are removed or cleansed.

The design methodology of Data Vault 2.0 enables parallel loading as much as possible so that Big Data implementations can scale up and out without the need for a significant redesign.

Data Vault is best suited to Big Data Environments where the mix of streaming, structured and unstructured data is prevalent, and focus is on performance at scale.

Data Lakes

A data lake is usually a single store of all enterprise data, including raw copies of source system data and transformed data used for tasks such as reporting, visualisation, advanced analytics and machine learning.

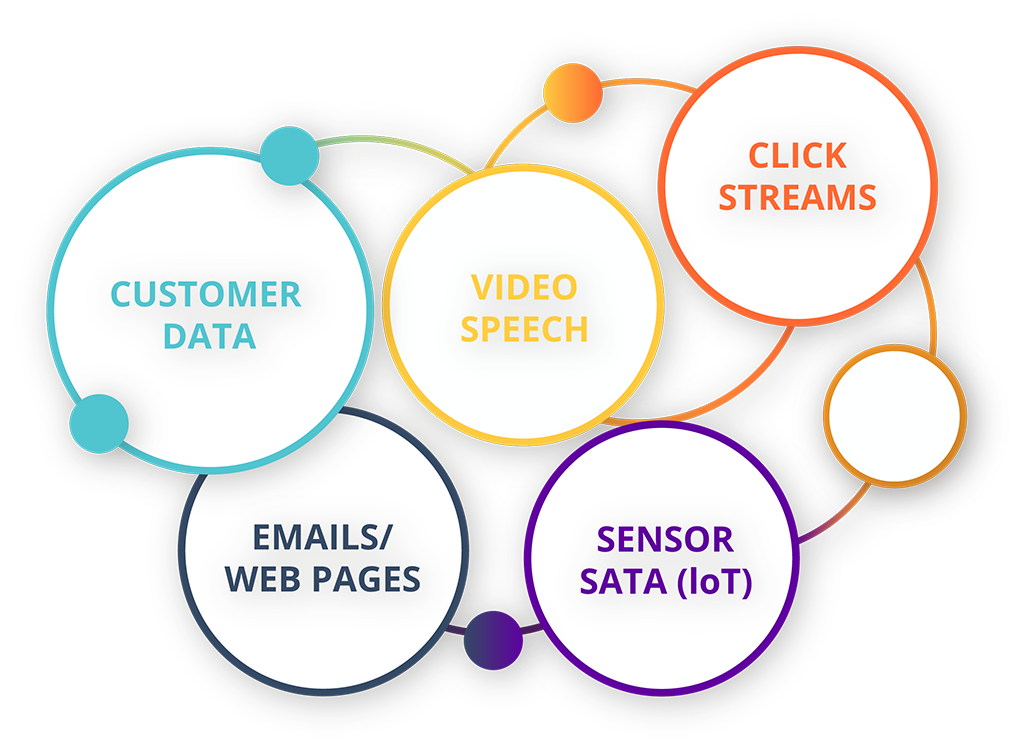

A data lake can include structured data from relational databases, semi-structured data, unstructured data and binary data.

You can establish a data lake “on-premises” or “in the cloud”. It is more typical to implement Data Lakes in the cloud.

Data lakes usually store massive amounts of data where traditional storage techniques will not handle the volumes of data.

Also, Data Lakes are useful in complex organisations where many different data sources exist and which are not necessarily related to each other but require storage for later processing.

Data stored in files & blobs in a Data Lake can lose metadata (data about the data). For example, business rules, validation requirements, data types are lost when storing information as a text file.

How do Data Lakes work?

Delta Lake

Find out more here:- https://delta.io/

How can we help?

- Current BI Review

- Proof of Concept Projects

- Design Scoping

- Data Modelling Development

- Data Integration & ETL (Extract, Transform & Load)

- Power BI Report Designing

- Microsoft Azure Implementation & Management

- Experience in Finance, Manufacturing, Healthcare, Retail & Charity sectors.